FaceID-GAN [1]

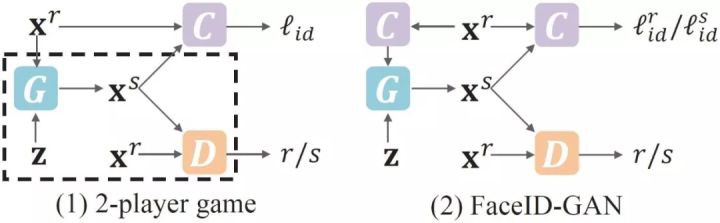

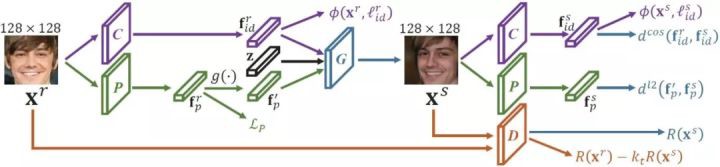

Face synthesis has advanced considerably through the use of generative adversarial networks (GANs). Existing methods typically formulate a GAN as a two-player game, in which a discriminator distinguishes face images from the real and synthesized domains, while a generator reduces the discriminator's ability to tell them apart by synthesizing faces of photo-realistic quality. Their competition converges when the discriminator can no longer differentiate the two domains. Unlike two-player GANs, this work generates identity-preserving faces by proposing FaceID-GAN, which treats a classifier of face identity as the third player, competing with the generator by distinguishing the identities of the real and synthesized faces (see Fig. 1). A stationary point is reached when the generator produces faces that are of high quality and also preserve identity. Instead of simply modeling the identity classifier as an additional discriminator, FaceID-GAN is formulated to satisfy information symmetry, which ensures that the real and synthesized images are projected into the same feature space. In other words, the identity classifier is used to extract identity features from both the input (real) and output (synthesized) face images of the generator, substantially alleviating the training difficulty of the GAN. Extensive experiments show that FaceID-GAN is able to generate faces of arbitrary viewpoint while preserving identity, outperforming recent advanced approaches.
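The competition between the generator and the identity classifier can be illustrated with a small sketch. The abstract does not give the loss functions, so the following is an assumption made for illustration: the classifier has 2N outputs, where classes 0..N-1 stand for "real face of identity k" and classes N..2N-1 stand for "synthesized face of identity k". The function names are hypothetical and the adversarial (image quality) loss of the discriminator is left out.

```python
import math

def softmax_cross_entropy(logits, target):
    """Cross-entropy of one example, given raw logits and a class index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]

def identity_losses(logits_real, logits_fake, identity, num_ids):
    """Losses of the identity game under the assumed 2N-way labeling.

    logits_real / logits_fake: classifier outputs (length 2 * num_ids) for a
    real face and for the face synthesized from it.
    """
    # Classifier: recognize the real identity and flag the synthesized
    # face as the "synthesized" copy of that identity.
    loss_classifier = (softmax_cross_entropy(logits_real, identity)
                       + softmax_cross_entropy(logits_fake, identity + num_ids))
    # Generator: push the synthesized face into the real class of the
    # same identity, i.e. fool the classifier while keeping identity.
    loss_generator = softmax_cross_entropy(logits_fake, identity)
    return loss_classifier, loss_generator

# Two identities, so four classes. The classifier currently spots the fake.
loss_c, loss_g = identity_losses([10, 0, 0, 0], [0, 0, 10, 0], identity=0, num_ids=2)
```

In this example the classifier loss is close to zero and the generator loss is large, which is the state the generator tries to escape. Because the same classifier processes both the real and the synthesized face, their identity features live in one feature space, which is the information symmetry the abstract describes.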

FaceFeat-GAN [2]

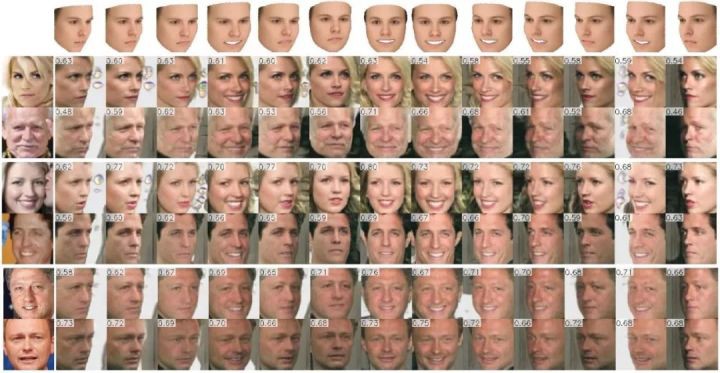

Advances in Generative Adversarial Networks (GANs) have enabled realistic face image synthesis. However, synthesizing face images that preserve facial identity while also showing high diversity within each identity remains challenging. To address this problem, we present FaceFeat-GAN, a novel generative model that improves both image quality and diversity by using two stages. Unlike existing single-stage models that map random noise directly to an image, our two-stage synthesis consists of a first stage of diverse feature generation and a second stage of feature-to-image rendering. The competitions between generators and discriminators are carefully designed in both stages with different objective functions. Specifically, in the first stage, they compete in the feature domain to synthesize various facial features rather than images. In the second stage, they compete in the image domain to render photo-realistic images that contain high diversity but preserve identity. Extensive experiments show that FaceFeat-GAN generates images that not only retain identity information but also have high diversity and quality, significantly outperforming previous methods.
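The two-stage data flow can be sketched as follows. This is only a toy illustration of the pipeline shape, not the model from the paper: random linear maps stand in for trained networks, all dimensions and names are hypothetical, and feeding an identity feature into the renderer is an assumption about how identity is preserved.

```python
import random

def make_linear(in_dim, out_dim, rng):
    """Return a random linear map as a stand-in for a trained network."""
    weights = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(out_dim)]
    def apply(x):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]
    return apply

NOISE_DIM, FEAT_DIM, ID_DIM, IMAGE_PIXELS = 4, 6, 3, 8  # hypothetical sizes

_init = random.Random(0)
feature_generator = make_linear(NOISE_DIM, FEAT_DIM, _init)           # stage 1
image_renderer = make_linear(FEAT_DIM + ID_DIM, IMAGE_PIXELS, _init)  # stage 2

def synthesize(identity_feature, rng):
    """Noise -> facial feature (stage 1) -> image (stage 2)."""
    noise = [rng.gauss(0, 1) for _ in range(NOISE_DIM)]
    feature = feature_generator(noise)  # diversity comes from the noise
    # Assumption: the renderer sees the generated feature and the identity.
    return image_renderer(feature + identity_feature)

identity = [1.0, 0.0, 0.0]
image_a = synthesize(identity, random.Random(1))
image_b = synthesize(identity, random.Random(2))
```

Here `image_a` and `image_b` share one identity feature but come from different noise samples, mirroring the goal of diverse images within a single identity. In the actual method each stage would also have its own discriminator, competing in the feature domain and the image domain respectively.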

[1] Yujun Shen, Ping Luo, Junjie Yan, Xiaogang Wang, Xiaoou Tang. FaceID-GAN: Learning a Symmetry Three-Player GAN for Identity-Preserving Face Synthesis. CVPR 2018.

[2] Yujun Shen, Bolei Zhou, Ping Luo, Xiaoou Tang. FaceFeat-GAN: a Two-Stage Approach for Identity-Preserving Face Synthesis. arXiv:1812.01288, 2019.